Pinch me, I'm zooming: gestures in the DOM

Interpreting multi-touch user gestures on the web is not as straightforward as you'd imagine.

In this article we look at how current major browsers behave (and misbehave) with regard to gestures, and piece together a workable solution that uses wheel, touch, and gesture DOM events.


Anatomy of a gesture

Two-finger gestures on touchscreens and modern trackpads allow users to manipulate on-screen elements as if they were physical objects: to move them and spin them around, to bring them closer or push them further away. Such a gesture encodes a combination of translation, uniform scaling, and rotation to apply to the target element. This is also known as an (affine) linear transformation.

The impression of direct manipulation is created when the transformation maps naturally to the movement of the touchpoints. There are ideas for novel ways to interpret a gesture, but operating systems have settled on a mapping which keeps the parts you touch underneath your fingertips throughout the gesture. This is the approach we're most familiar with, and the one we will explore in this article.

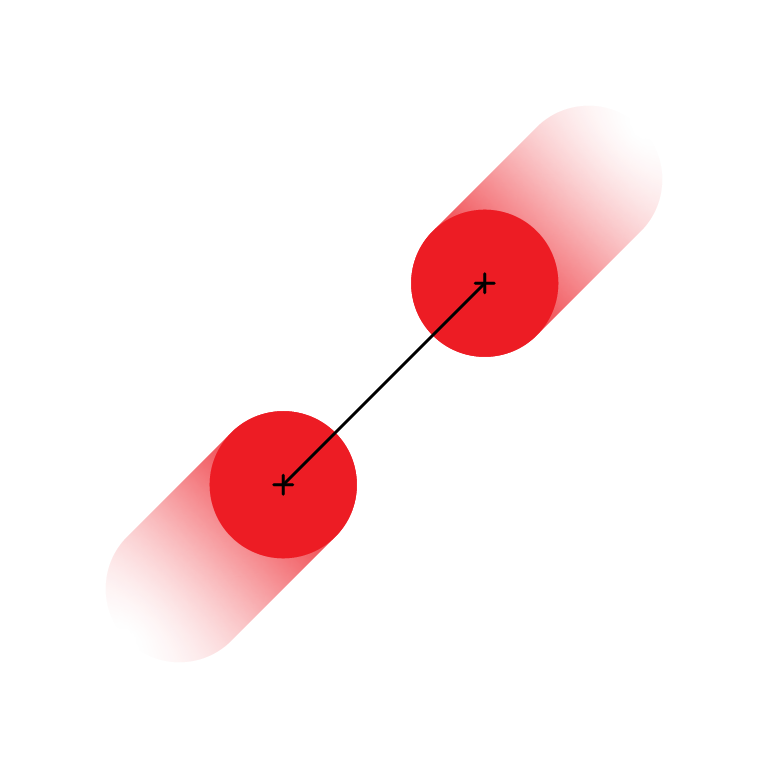

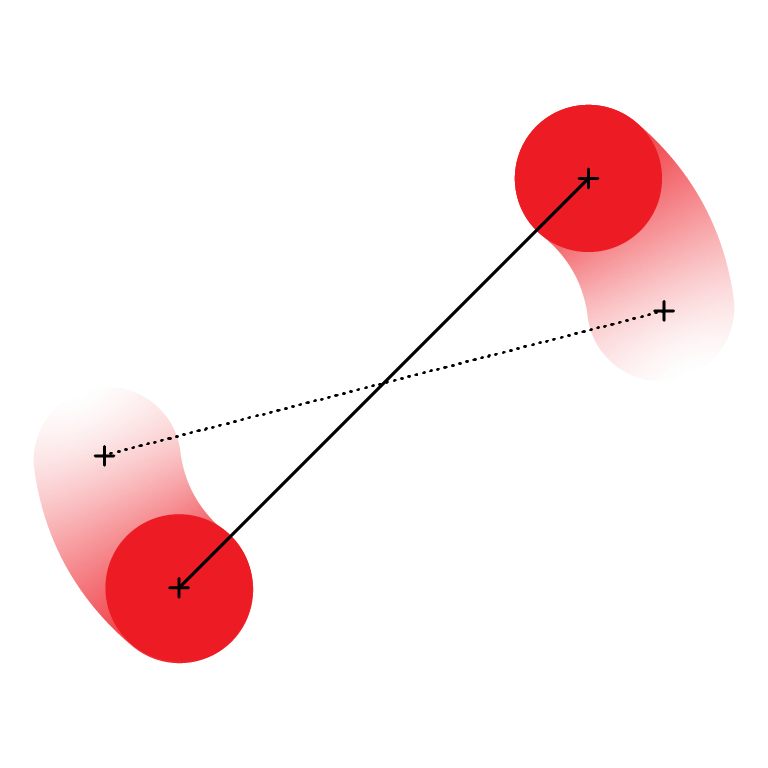

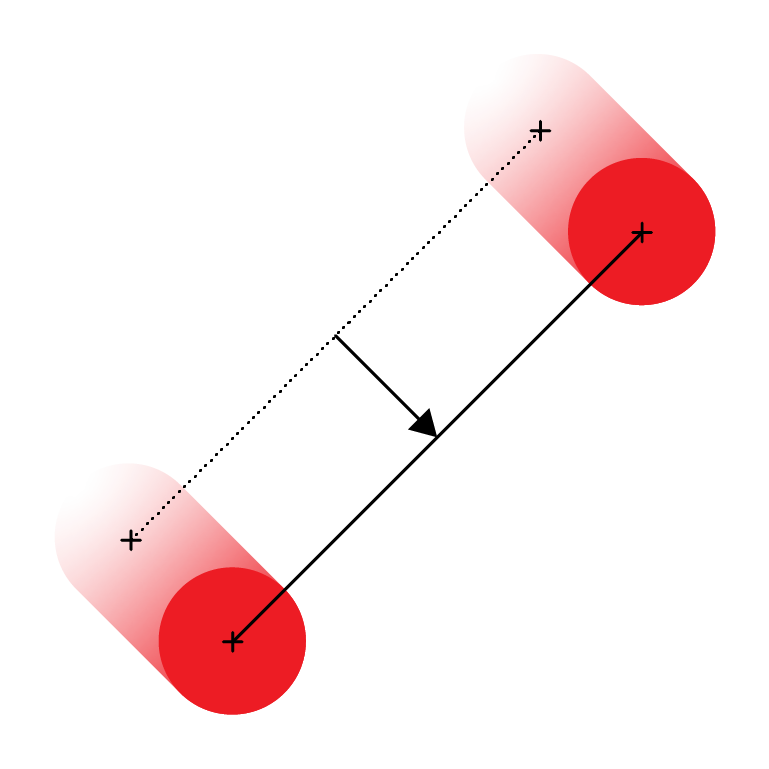

Let's see how a two-finger gesture maps to the basic components of a linear transformation.

Scale. The change in distance between the two touchpoints throughout the gesture dictates the scale: if the fingers are brought together to half the initial distance, the object should be made half its original size.

Rotation. The change in the slope (angle) of the line through the two touchpoints dictates the rotation to be applied to the object.

Origin and translation. The midpoint, located halfway between the two touchpoints, has a double role: its initial coordinates establish the transformation origin, and its movement throughout the gesture translates the object accordingly.
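The three rules can also be written in symbols. The notation here is ours. Let $p_1, p_2$ be the initial touchpoints and $q_1, q_2$ their current positions. Let $\angle(v)$ be the direction angle of a vector $v$ (the atan2 of its y and x components), and let $R(\theta)$ be the rotation by $\theta$. The scale, rotation, origin, and translation are then:

$$
s = \frac{\lVert q_2 - q_1 \rVert}{\lVert p_2 - p_1 \rVert},
\qquad
\theta = \angle(q_2 - q_1) - \angle(p_2 - p_1),
$$

$$
o = \frac{p_1 + p_2}{2},
\qquad
t = \frac{q_1 + q_2}{2} - o,
$$

and a point $x$ of the object moves to

$$
x' = o + t + s\,R(\theta)\,(x - o).
$$

As a quick check, plug in $x = p_1$. Then $x - o = (p_1 - p_2)/2$, and $s\,R(\theta)$ maps $p_1 - p_2$ onto $q_1 - q_2$ by construction. So $x' = (q_1 + q_2)/2 + (q_1 - q_2)/2 = q_1$, and likewise $p_2$ lands on $q_2$. Both touched points stay under the fingertips.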

Native applications on touch-enabled devices have access to high-level APIs that provide the translation, scale, rotation, and origin of a user gesture directly. On the web, we have to glue together several types of events to get similar results across a variety of platforms.

The relevant DOM events: wheel, touch, and gesture

A WheelEvent is triggered when the user intends to scroll an element with the mousewheel (from which the interface takes its name), a separate "scroll area" on older trackpads, or the entire surface area of newer trackpads with the two-finger vertical movement.

Wheel events have deltaX, deltaY, and deltaZ properties to encode the displacement dictated by the input device, and a deltaMode to establish the unit of measurement:

| deltaMode | Value | Meaning |
|---|---|---|
| DOM_DELTA_PIXEL | 0 | scroll a precise amount of pixels |
| DOM_DELTA_LINE | 1 | scroll by lines |
| DOM_DELTA_PAGE | 2 | scroll by entire pages |

As pinch gestures on trackpads became more commonplace, browser implementers needed a way to support them in desktop browsers. Kenneth Auchenberg, in his article on detecting multi-touch trackpad gestures, brings together key pieces of the story.

In short, Chrome settled on an approach inspired by Internet Explorer: encode pinch gestures as wheel events with ctrlKey: true, and the deltaY property holding the proposed scale increment. Firefox eventually did the same, and with Microsoft Edge recently having switched to Chromium as its underlying engine, we have a "standard" of sorts. I use scare-quotes because, as we'll see in the next section, some aspects don't quite match across browsers.

Sometime between Chrome and Firefox adding support for pinch-zoom, Safari 9.1 brought its very own GestureEvent, which exposes precomputed scale and rotation properties, to the desktop.

To this day, Safari remains the only browser that implements GestureEvent, even among browsers on touch-enabled platforms. Instead, mobile browsers produce the lower-level TouchEvents, which encode the positions of individual touchpoints in a gesture. They allow us, with a bit more effort than is required with higher-level events, to compute all the components of the linear transformation ourselves.

Whereas WheelEvent only encodes scale, and GestureEvent includes scale and rotation, TouchEvent lets us capture the translation as well, giving us finer-grained control over how we interpret the gesture.

Combined wheel, gesture and touch events seem sufficient to handle two-finger gestures across a variety of platforms. Let's see how this intuition, *ahem*, pans out.

Putting browsers to the test

I've put together a test page that logs relevant properties of all the wheel, gesture, and touch events it captures.

I've tested a series of scrolls and pinches in recent versions of Firefox, Chrome, Safari, and Edge (Chromium-based), on a variety of devices I managed to procure for this purpose:

- a MacBook Pro (macOS Big Sur);

- a Surface Laptop with a touchscreen and built-in precision touchpad (Windows 10);

- an ASUS notebook with a non-precision touchpad (Windows 10);

- an iPhone (iOS 14);

- an iPad with a keyboard (iPadOS 14); and

- an external mouse to connect to all laptops.

Let's dig into a few of the results, and how they inform our solution.

Results on macOS

When you perform a pinch-zoom gesture, Firefox and Chrome produce a wheel event with deltaY: ±scale, ctrlKey: true. They produce an identical result when you scroll normally with two fingers while physically pressing down the Ctrl key, with the difference that the latter is subject to inertial scrolling.

Safari emits its proprietary gesturestart, gesturechange, and gestureend events, which carry precomputed scale and rotation values.

In all browsers, clientX and clientY, as well as the position of the on-screen cursor, remain constant throughout two-finger gestures. This pair of coordinates establishes the gesture origin.

In the process of testing various modifier keys, I noted some default browser behaviors that you'll likely need to deflect with event.preventDefault():

- Option + wheel in Firefox navigates (or rather flies) through the browser history; this would make sense for discrete steps on a mousewheel, but feels too weird to be useful on an inertial trackpad.

- Command + wheel in Firefox zooms in and out of the page, similarly to the Command + and Command - keyboard shortcuts.

- Pinching inwards in Safari minimizes the tab into a tab overview screen.

External, third-party mice are a different matter. Instead of the smooth pixel increments on the trackpad, the mouse's wheel jumps entire lines at a time. (The Scrolling speed setting in System Preferences > Mouse controls how many.)

Accordingly, Firefox shows deltaY: ±1, deltaMode: DOM_DELTA_LINE for a tick of the wheel. This is the first, and at least on macOS the only, encounter with DOM_DELTA_LINE. Chrome and Safari stick with deltaMode: DOM_DELTA_PIXEL and a much larger deltaY, sometimes hundreds of pixels at a time. This is an instance of the "many more pixels than expected" deviation, of which we'll see more throughout the test session. A basic pinch-zoom implementation that doesn't account for this quirk will zoom in and out in large, hard-to-control strides when you use the mousewheel.

In all three browsers, deltaX is normally zero. Holding down the Shift key, a common way for users of an external mouse to scroll horizontally, swaps the deltas, so that deltaX carries the value and deltaY becomes zero instead.

Results on Windows

A precision touchpad works on Windows similarly to the Magic Trackpad on macOS: Firefox, Chrome, and Edge produce results comparable to what we've seen on macOS. The quirks emerge with non-precision touchpads and external mice, however.

On Windows, the wheel of an external mouse has two scroll modes: either L lines at a time (with a configurable L), or a whole page at a time.

When you use the external mouse with line-scrolling, Firefox produces the expected deltaY: ±L, deltaMode: DOM_DELTA_LINE. Chrome generates deltaY: ±L * N, deltaMode: DOM_DELTA_PIXEL, where N is a per-line multiplier dictated by the browser that varies by machine: I've seen 33px on the ASUS laptop and 50px on the Surface. (There's probably an inner logic to what's going on, but it doesn't warrant further investigation at this point.) Edge produces deltaY: ±100, deltaMode: DOM_DELTA_PIXEL, so 100px regardless of the number of lines L that the mouse is configured to scroll. With page-scrolling, browsers uniformly report deltaY: ±1, deltaMode: DOM_DELTA_PAGE. None of the three browsers supports holding down Shift to reverse the scroll axis of the mousewheel.

On non-precision touchpads, the effect of scrolling on the primary (vertical) axis will mostly be equivalent to that of a mousewheel. The behavior of the secondary (horizontal) axis will not necessarily match it. At least on the machines on which I performed the tests, mouse settings also apply to the touchpad, even when there was no external mouse attached.

In Firefox, in line-scrolling mode, scrolls on both axes produce deltaMode: DOM_DELTA_LINE, with deltaX and deltaY, respectively, containing a fraction of a line. A pinch gesture produces a constant deltaY: ±L, deltaMode: DOM_DELTA_LINE, ctrlKey: true. In page-scrolling mode, scrolls on the primary axis produce deltaMode: DOM_DELTA_PAGE, while scrolls on the secondary axis remain in deltaMode: DOM_DELTA_LINE. The pinch gesture produces deltaY: ±1, deltaMode: DOM_DELTA_PAGE, ctrlKey: true.

In Chrome, a surprising result is that scrolling on the secondary axis gives you deltaX: 0, deltaY: N * ±L, shiftKey: true. Otherwise, the effects seen with a non-precision touchpad on Windows fall into the "unexpected deltaMode" or "unexpected deltaY value" categories.

Unifying wheel, touch, and gesture events

The API that unifies wheel, touch, and gesture events should look a lot like Safari's GestureEvent. We will model it around three callbacks:

- startGesture(gesture), invoked at the beginning of the transformation;
- doGesture(gesture), invoked continuously throughout the transformation;
- endGesture(gesture), invoked at the end of the transformation.

The gesture object should describe the transformation completely:

```javascript
{
  origin: { x: …, y: … },
  translation: { x: …, y: … },
  scale: …,
  rotation: …
}
```

Let's see how each type of event maps to this setup.

Processing Safari's gesture events

GestureEvents are the most straightforward to map, so we'll start with those.

We pick scale and rotation properties directly from the event object, and fill in the origin based on the mouse coordinates. Since these types of events don't include a translation, we assume there to be no translation, that is translation: { x: 0, y: 0 }.

```javascript
/* Handle Safari GestureEvents */
let gesture = false;

container.addEventListener('gesturestart', e => {
  if (e.cancelable !== false) {
    e.preventDefault();
  }
  if (!gesture) {
    startGesture({
      translation: { x: 0, y: 0 },
      scale: e.scale,
      rotation: e.rotation,
      origin: { x: e.clientX, y: e.clientY }
    });
    gesture = true;
  }
}, { passive: false });

container.addEventListener('gesturechange', e => {
  if (e.cancelable !== false) {
    e.preventDefault();
  }
  if (gesture) {
    doGesture({
      translation: { x: 0, y: 0 },
      scale: e.scale,
      rotation: e.rotation,
      origin: { x: e.clientX, y: e.clientY }
    });
  }
}, { passive: false });

container.addEventListener('gestureend', e => {
  if (gesture) {
    endGesture({
      translation: { x: 0, y: 0 },
      scale: e.scale,
      rotation: e.rotation,
      origin: { x: e.clientX, y: e.clientY }
    });
    gesture = false;
  }
});
```

An occasional misfire where the gestureend event is emitted twice at the end of a gesture (WebKit#233137) is worked around with the gesture flag.

Safari also has a built-in pinch to show all tabs gesture which normally gets canceled when we call preventDefault() on the gesture events. However, when the user combines a scale and rotation gesture, the browser behavior will be triggered nonetheless (WebKit#233141). I don't have a workaround yet, but would love to know if there is one.
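The three Safari handlers above differ only in which callback they call and in how they treat the gesture flag. Here is a sketch that pulls the flag logic into a small wrapper. It ignores changes outside a gesture and a duplicated end notification. The helper names createGestureGuard and gestureFromSafariEvent are hypothetical and not part of any browser API. The wrapper takes plain objects, so the WebKit#233137 workaround can be exercised without a browser:

```javascript
// Hypothetical helper: wraps the three gesture callbacks so that
// changes are only forwarded while a gesture is active, and a second
// "end" (such as a duplicated gestureend event) is ignored.
function createGestureGuard({ startGesture, doGesture, endGesture }) {
  let active = false;
  return {
    start(gesture) {
      if (active) return;
      active = true;
      startGesture(gesture);
    },
    change(gesture) {
      if (active) doGesture(gesture);
    },
    end(gesture) {
      if (!active) return; // duplicate end: nothing to do
      active = false;
      endGesture(gesture);
    },
  };
}

// Hypothetical helper: builds the gesture object from a GestureEvent-like
// object. GestureEvent carries no translation, so it is always zero.
function gestureFromSafariEvent(e) {
  return {
    translation: { x: 0, y: 0 },
    scale: e.scale,
    rotation: e.rotation,
    origin: { x: e.clientX, y: e.clientY },
  };
}
```

In the browser, each listener would then reduce to the same preventDefault() check followed by a call such as guard.change(gestureFromSafariEvent(e)).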

Sidenote: Passive event listeners

It used to be the case that we could call preventDefault() on most events from any listener. This forced the browser to always be ready to have its default behavior overridden, which in some situations (scrolling, touch events) led to less than ideal performance.

To mitigate this, the passive flag was introduced as an option for addEventListener(). To be able to prevent the default browser behavior, you need to register an active event listener by passing in the { passive: false } option.

For backwards compatibility, event listeners are active by default. But in some browsers, for some performance-critical contexts (notably touchstart, touchmove, and wheel listeners registered on window, document, or the body element), they default to being passive. To make sure our event listeners work as expected (now, and going forward), throughout the code samples we use { passive: false } explicitly whenever we want to call preventDefault(), first checking that the event is cancelable.

Starting with Safari 15, macOS Safari also emits wheel events for the pinch-zoom gesture (WebKit#225788). If you're only interested in scale transformations, in the future you'll be able to listen to wheel events, as shown in the next section, instead of relying on the non-standard GestureEvent.

Converting wheel events to gestures

For wheel events, we have a few things to figure out:

- How to normalize the various ways browsers emit wheel events into a uniform delta value.
- How to map the occurrence of wheel events to startGesture, doGesture, and endGesture.
- How to compute the scale value from the delta.

Let's explore each sub-problem one by one.

1. Normalizing wheel events

Our goal here is to implement a normalizeWheelEvent function as described below:

```javascript
/*
  Normalizes WheelEvent `e`,
  returning an array of deltas `[dx, dy]`.
*/
function normalizeWheelEvent(e) {
  let dx = e.deltaX;
  let dy = e.deltaY;
  // TODO: normalize dx, dy
  return [dx, dy];
}
```

This is where we can put our experimental browser data to good use. Let's recap some findings relevant to normalizing wheel events.

The browser may emit deltaX: 0, deltaY: N, shiftKey: true when scrolling horizontally. We want to interpret this as deltaX: N, deltaY: 0 instead:

```javascript
// swap `dx` and `dy`:
if (dx === 0 && e.shiftKey) {
  [dx, dy] = [dy, dx];
}
```

Furthermore, the browser may emit values in a deltaMode other than pixels; for each, we need a multiplier:

```javascript
if (e.deltaMode === WheelEvent.DOM_DELTA_LINE) {
  dx *= 8;
  dy *= 8;
} else if (e.deltaMode === WheelEvent.DOM_DELTA_PAGE) {
  dx *= 24;
  dy *= 24;
}
```

The choice of multipliers ultimately depends on the application. We might take inspiration from browsers themselves or from other tools the user may be familiar with. A document viewer may respect the mouse configuration to scroll one page at a time, while map-pinching may benefit from smaller increments.

Finally, a browser may not emit DOM_DELTA_LINE or DOM_DELTA_PAGE where the input device settings dictate them, and instead offer a premultiplied value in DOM_DELTA_PIXELs. This value is often very large, 100px or more at a time.

Why would browsers do that? A whole lot of code out there fails to look at the deltaMode. In that code, minuscule DOM_DELTA_LINE / DOM_DELTA_PAGE increments would be incorrectly interpreted as pixels, which would make for lackluster scrolls. Browsers can be excused for trying to give a helping hand. But premultiplied pixel values, often computed in a way that only works if you think of wheel events as signifying scroll intents, make wheel events harder to use for other purposes.

Thankfully, even in the absence of a more sophisticated approach, simply capping deltaY at something reasonable (e.g. 24px), just to put the brakes on wild zooms, can go a long way towards improving the experience:

```javascript
/*
  Note: we're using `Math.sign()` and `Math.min()`
  to impose a maximum on the *absolute* value
  of a possibly-negative number.
*/
dy = Math.sign(dy) * Math.min(24, Math.abs(dy));
```

These few adjustments should cover a vast array of variations across browsers and devices. Yay compromise!
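Put together, the steps above give a complete normalizeWheelEvent. The sketch below inlines the numeric values of WheelEvent.DOM_DELTA_LINE (1) and WheelEvent.DOM_DELTA_PAGE (2) so it can run outside a browser. Like the text, it caps only dy. The constant names are our own, and the 8/24/24 values are the example numbers from above, not recommendations:

```javascript
// Numeric values of WheelEvent.DOM_DELTA_LINE / DOM_DELTA_PAGE,
// inlined so the sketch also runs where WheelEvent isn't defined.
const DOM_DELTA_LINE = 1;
const DOM_DELTA_PAGE = 2;

// Example multipliers and cap from the text; tune per application.
const LINE_MULTIPLIER = 8;
const PAGE_MULTIPLIER = 24;
const MAX_ABS_DY = 24;

function normalizeWheelEvent(e) {
  let dx = e.deltaX;
  let dy = e.deltaY;

  // Horizontal scroll reported as vertical delta + Shift: swap the axes.
  if (dx === 0 && e.shiftKey) {
    [dx, dy] = [dy, dx];
  }

  // Convert line- and page-based deltas to approximate pixels.
  if (e.deltaMode === DOM_DELTA_LINE) {
    dx *= LINE_MULTIPLIER;
    dy *= LINE_MULTIPLIER;
  } else if (e.deltaMode === DOM_DELTA_PAGE) {
    dx *= PAGE_MULTIPLIER;
    dy *= PAGE_MULTIPLIER;
  }

  // Cap the magnitude of dy while keeping its sign.
  dy = Math.sign(dy) * Math.min(MAX_ABS_DY, Math.abs(dy));

  return [dx, dy];
}
```

For example, a single line-mode tick such as { deltaX: 0, deltaY: -1, deltaMode: 1, shiftKey: false } normalizes to [0, -8]. A premultiplied 100px pixel delta gets capped at 24.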

2. Generating gesture events from wheel events

With normalization out of the way, the next obstacle is that wheel events are individual occurrences, for which we must devise a "start" and "end" if we want to call startGesture and endGesture.

The first wheel event marks the beginning of a gesture. But what about the end? In line with keeping things simple, we consider a gesture done once a number of milliseconds pass since the last wheel event. An outline for batching wheel events into gestures is listed below:

```javascript
let timer;
let gesture = null;

container.addEventListener('wheel', function(e) {
  if (e.cancelable !== false) {
    e.preventDefault();
  }

  /*
    If we don't have a `gesture`, it means
    we're just starting a batch of `wheel` events.
  */
  if (!gesture) {
    gesture = { … };
    startGesture(gesture);
  }

  /*
    Perform the current wheel gesture
  */
  gesture = { … };
  doGesture(gesture);

  if (timer) {
    window.clearTimeout(timer);
  }

  /*
    When the time runs out, call `endGesture`.
  */
  timer = window.setTimeout(function() {
    endGesture(gesture);
    gesture = null;
  }, 200); // timeout in milliseconds
}, { passive: false });
```

The actual gesture arguments to the startGesture, doGesture, and endGesture functions are explored in the next section.
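The same start/change/end batching can be written as a small, reusable function. In this sketch, createBatcher and its option names are hypothetical. The timer functions are injectable, so the behavior can be checked with a fake clock instead of actually waiting 200ms:

```javascript
// Hypothetical helper: turns a stream of discrete events into
// start/change/end callbacks. A batch ends once `timeout` ms pass
// without a new event; onEnd receives the last value seen.
function createBatcher({
  onStart,
  onChange,
  onEnd,
  timeout = 200,
  setTimer = (fn, ms) => setTimeout(fn, ms),
  clearTimer = (id) => clearTimeout(id),
}) {
  let active = false;
  let timer = null;

  return function handle(value) {
    if (!active) {
      active = true;
      onStart(value);
    }
    onChange(value);

    // Restart the countdown on every event.
    if (timer !== null) {
      clearTimer(timer);
    }
    timer = setTimer(() => {
      timer = null;
      active = false;
      onEnd(value);
    }, timeout);
  };
}
```

Wired to startGesture, doGesture, and endGesture, the wheel listener would compute the next gesture and pass it to handle(). One difference from the outline above: onStart and onChange receive the same value, rather than separate initial and updated gesture objects.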

3. Converting the wheel delta to a scale

In Safari, a gesturechange event's scale property holds the accumulated scale to apply to the object at each moment of the gesture:

```javascript
final_scale = initial_scale * event.scale;
```

In fact, the documentation for UIPinchGestureRecognizer, which native iOS apps use to detect pinch gestures and which works similarly to Safari's GestureEvent, emphasizes this aspect:

Important: Take care when applying a pinch gesture recognizer’s scale factor to your content, or you might get unexpected results. Because your action method may be called many times, you cannot simply apply the current scale factor to your content. If you multiply each new scale value by the current value of your content, which has already been scaled by previous calls to your action method, your content will grow or shrink exponentially. Instead, cache the original value of your content, apply the scale factor to that original value, and apply the new value back to your content. Alternatively, reset the scale factor to 1.0 after applying each new change.

On the other hand, pinch gestures encoded as wheel events contain deltas that correspond to percentage changes in scale, which you're supposed to apply incrementally. Negative deltas increase the object's scale, while positive deltas decrease it. For example:

- delta: 10 shrinks the object by 10%;
- delta: -20 enlarges the object by 20%.

A single formula covers both cases:

```javascript
let scale = previous_scale * (1 - delta / 100);
```

Accumulating a series of deltas d1, d2, …, dN into a final scaling factor requires some back-of-the-napkin arithmetic. The intermediary scales…

```javascript
let scale1 = initial_scale * (1 - d1/100);
let scale2 = scale1 * (1 - d2/100);
let scale3 = scale2 * (1 - d3/100);
// …and so on
```

…lead us to the formula for the final scale:

```javascript
let factor = (1 - d1/100) * (1 - d2/100) * … * (1 - dN/100);
let final_scale = initial_scale * factor;
```

This lets us flesh out the scale we're supposed to send to the startGesture, doGesture, and endGesture functions we introduced in the previous section:

```javascript
let timer;
let gesture = null;

container.addEventListener('wheel', function(e) {
  if (e.cancelable !== false) {
    e.preventDefault();
  }

  let [dx, dy] = normalizeWheelEvent(e);

  if (!gesture) {
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: 1,
      translation: { x: 0, y: 0 }
    };
    startGesture(gesture);
  }

  if (e.ctrlKey) {
    // pinch-zoom gesture
    let factor = (1 - dy / 100);
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: gesture.scale * factor,
      translation: gesture.translation
    };
  } else {
    // pan gesture
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: gesture.scale,
      translation: {
        x: gesture.translation.x - dx,
        y: gesture.translation.y - dy
      }
    };
  }

  doGesture(gesture);

  if (timer) {
    window.clearTimeout(timer);
  }

  timer = window.setTimeout(function() {
    if (gesture) {
      endGesture(gesture);
      gesture = null;
    }
  }, 200);
}, { passive: false });
```

This approach will get us scale values in the same ballpark for WheelEvent and GestureEvent, but you'll notice pinches in Firefox and Chrome effect a smaller scale factor than similar gestures in Safari. We can solve this by mixing in a SPEEDUP multiplier that makes up for the difference:

```javascript
/*
  Eyeballing it suggests the sweet spot
  for SPEEDUP is somewhere between
  1.5 and 3. Season to taste!
*/
const WHEEL_SCALE_SPEEDUP = 2.5;
let factor = (1 - (WHEEL_SCALE_SPEEDUP * dy) / 100);
```

As Stephane points out over email, there's one final touch-up we could make that involves the symmetry of negative and positive dy values: when you shrink an object by 20%, you can't bring it back to its original scale by enlarging it by 20%.
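The asymmetry is easy to see numerically. This sketch applies the incremental factor 1 - delta / 100 from above to a run of deltas, without any speedup. The helper names naiveFactor and accumulateScale are ours:

```javascript
// Scale factor for a single wheel delta: positive deltas shrink,
// negative deltas enlarge (no speedup applied).
function naiveFactor(delta) {
  return 1 - delta / 100;
}

// Applies a run of deltas incrementally, starting from `initialScale`.
function accumulateScale(initialScale, deltas) {
  return deltas.reduce((scale, d) => scale * naiveFactor(d), initialScale);
}

// Shrink by 20%, then enlarge by 20%: 1 * 0.8 * 1.2 = 0.96, not 1.
const roundTrip = accumulateScale(1, [20, -20]);
```

Since the factors simply multiply, the order doesn't matter: [-20, 20] ends at 0.96 as well.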

That wheel events for pinching in and out don't add up to zero is an unfortunate side-effect of how browsers have implemented the pinch-to-wheel feature. To work around the asymmetry, we can reinterpret "shrink the object by N%" to mean "shrink it by X%, so that enlarging it by N% would restore the original scale". Solving the equation for X:

(1 - X/100) * (1 + N/100) = 1

…gives 1 - X/100 = 1 / (1 + N/100). With N = WHEEL_SCALE_SPEEDUP * dy, this is the adjusted factor for positive values of dy:

```javascript
let factor = dy <= 0 ?
  1 - (WHEEL_SCALE_SPEEDUP * dy) / 100 :
  1 / (1 + (WHEEL_SCALE_SPEEDUP * dy) / 100);
```

The listing below contains the final code for handling wheel events; after that, we'll see what we can do about touch events.
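The symmetry can be checked numerically too. This sketch wraps the adjusted factor in a function and confirms that equal and opposite deltas now cancel out. The name symmetricFactor is ours, and it takes the speedup as a parameter instead of reading WHEEL_SCALE_SPEEDUP:

```javascript
// Adjusted wheel-delta-to-scale factor: enlarging uses 1 + N/100,
// shrinking uses its reciprocal, so opposite deltas cancel out.
function symmetricFactor(dy, speedup) {
  return dy <= 0
    ? 1 - (speedup * dy) / 100
    : 1 / (1 + (speedup * dy) / 100);
}

const SPEEDUP = 2.5;

// Pinch out with dy = -4, then in with dy = 4 (N = 10 either way).
const enlarged = symmetricFactor(-4, SPEEDUP); // 1.1
const shrunk = symmetricFactor(4, SPEEDUP);    // 1 / 1.1
const cancelled = enlarged * shrunk;           // ≈ 1
```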

Full listing for the wheel handling code

```javascript
const WHEEL_SCALE_SPEEDUP = 2;
const WHEEL_TRANSLATION_SPEEDUP = 2;

let gesture = null;
let timer;

container.addEventListener('wheel', function(e) {
  if (e.cancelable !== false) {
    e.preventDefault();
  }

  let [dx, dy] = normalizeWheelEvent(e);

  if (!gesture) {
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: 1,
      translation: { x: 0, y: 0 }
    };
    startGesture(gesture);
  }

  if (e.ctrlKey) {
    // pinch-zoom
    let factor = dy <= 0 ?
      1 - (WHEEL_SCALE_SPEEDUP * dy) / 100 :
      1 / (1 + (WHEEL_SCALE_SPEEDUP * dy) / 100);
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: gesture.scale * factor,
      translation: gesture.translation
    };
  } else {
    // panning
    gesture = {
      origin: { x: e.clientX, y: e.clientY },
      scale: gesture.scale,
      translation: {
        x: gesture.translation.x - WHEEL_TRANSLATION_SPEEDUP * dx,
        y: gesture.translation.y - WHEEL_TRANSLATION_SPEEDUP * dy
      }
    };
  }

  doGesture(gesture);

  if (timer) {
    window.clearTimeout(timer);
  }

  timer = window.setTimeout(function() {
    if (gesture) {
      endGesture(gesture);
      gesture = null;
    }
  }, 200);
}, { passive: false });
```

Converting touch events to gestures

Touch events are more low-level. They contain everything we need to derive each component of the linear transformation ourselves. Each individual touchpoint is encoded in the event.touches list as a Touch object containing, among others, its coordinates clientX and clientY.

Emitting gesture-like events

The four touch events are touchstart, touchmove, touchend and touchcancel.

We want to map these to the startGesture, doGesture and endGesture callbacks.

Each individual touch produces a touchstart event on contact and a touchend event when lifted from the touchscreen. The touchcancel event is emitted when the browser wants to bail out of the gesture (for example, when adding too many touchpoints to the screen).

For our purpose, we want to observe gestures involving exactly two touchpoints, and we use the same function, watchTouches, for the touchstart, touchend, and touchcancel events.

```javascript
let gesture = false;

function watchTouches(e) {
  if (e.touches.length === 2) {
    gesture = true;
    if (e.cancelable !== false) {
      e.preventDefault();
    }
    startGesture(…);
    container.addEventListener('touchmove', touchMove, { passive: false });
    container.addEventListener('touchend', watchTouches);
    container.addEventListener('touchcancel', watchTouches);
  } else if (gesture) {
    gesture = false;
    endGesture(…);
    container.removeEventListener('touchmove', touchMove);
    container.removeEventListener('touchend', watchTouches);
    container.removeEventListener('touchcancel', watchTouches);
  }
}

container.addEventListener('touchstart', watchTouches, { passive: false });
```

The touchmove event is the only one using its own separate listener:

```javascript
function touchMove(e) {
  if (e.touches.length === 2) {
    doGesture(…);
    if (e.cancelable !== false) {
      e.preventDefault();
    }
  }
}
```

Producing the linear transformation

The initial touches that begin a gesture must be stored so we can keep track of how they move throughout the gesture. We take advantage of the fact that TouchList and Touch objects are immutable and just save a reference:

```javascript
let gesture = false;
let initial_touches;

function watchTouches(e) {
  if (e.touches.length === 2) {
    gesture = true;
    initial_touches = e.touches;
    startGesture(…);
    …
  }
  …
}
```

The argument to startGesture is straightforward: we haven't done any gesturing yet, so all parts of the transformation are set to their initial values. The origin of the transformation is the midpoint between the two initial touchpoints:

```javascript
startGesture({
  scale: 1,
  rotation: 0,
  translation: { x: 0, y: 0 },
  origin: midpoint(initial_touches)
});
```

With the midpoint computed as:

```javascript
function midpoint(touches) {
  let [t1, t2] = touches;
  return {
    x: (t1.clientX + t2.clientX) / 2,
    y: (t1.clientY + t2.clientY) / 2
  };
}
```

For the doGesture function, we must compare our pair of current touchpoints to the initial ones, using the distance and angle formed by each pair (computed by the functions below):

```javascript
// Distance between the two touchpoints, in pixels.
function distance(touches) {
  let [t1, t2] = touches;
  return Math.hypot(t2.clientX - t1.clientX, t2.clientY - t1.clientY);
}

// Angle of the line from the first to the second touchpoint, in degrees.
function angle(touches) {
  let [t1, t2] = touches;
  let dx = t2.clientX - t1.clientX;
  let dy = t2.clientY - t1.clientY;
  return Math.atan2(dy, dx) * 180 / Math.PI;
}
```

With these, we can produce the argument to doGesture:

```javascript
let mp_init = midpoint(initial_touches);
let mp_curr = midpoint(e.touches);

doGesture({
  scale: distance(e.touches) / distance(initial_touches),
  rotation: angle(e.touches) - angle(initial_touches),
  translation: {
    x: mp_curr.x - mp_init.x,
    y: mp_curr.y - mp_init.y
  },
  origin: mp_init
});
```

Finally, let's tackle the argument to endGesture. It can't be computed on the spot, since at the moment when endGesture gets called, we explicitly don't have two touchpoints available anymore. We must keep track of the last gesture we have produced and send that to the endGesture function. The gesture variable, which up until this point held a boolean value, sounds like a good place to do it.
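midpoint(), distance(), and angle() only read clientX and clientY, so the doGesture argument can be checked outside the browser. This sketch bundles them into a hypothetical gestureFromTouches() helper and feeds it plain objects standing in for Touch. In the example, the second finger moves from 10px to the right of the first to 20px below it. We therefore expect the distance to double and the line to turn by 90 degrees:

```javascript
// Same math as midpoint(), distance(), and angle() above,
// for anything with clientX/clientY.
function midpoint([t1, t2]) {
  return { x: (t1.clientX + t2.clientX) / 2, y: (t1.clientY + t2.clientY) / 2 };
}

function distance([t1, t2]) {
  return Math.hypot(t2.clientX - t1.clientX, t2.clientY - t1.clientY);
}

function angle([t1, t2]) {
  return Math.atan2(t2.clientY - t1.clientY, t2.clientX - t1.clientX) * 180 / Math.PI;
}

// Hypothetical helper: the doGesture argument as a pure function
// of the initial and current touch pairs.
function gestureFromTouches(initial, current) {
  const mpInit = midpoint(initial);
  const mpCurr = midpoint(current);
  return {
    scale: distance(current) / distance(initial),
    rotation: angle(current) - angle(initial),
    translation: { x: mpCurr.x - mpInit.x, y: mpCurr.y - mpInit.y },
    origin: mpInit,
  };
}

const initial = [{ clientX: 0, clientY: 0 }, { clientX: 10, clientY: 0 }];
const current = [{ clientX: 0, clientY: 0 }, { clientX: 0, clientY: 20 }];
const g = gestureFromTouches(initial, current);
// g ≈ { scale: 2, rotation: 90, translation: { x: -5, y: 10 }, origin: { x: 5, y: 0 } }
```

Since screen y grows downwards, a positive rotation here is clockwise on screen, which matches the direction of CSS rotate().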

Here's how the watchTouches and touchMove functions look with everything put together.

Full listing for the touch events code

```javascript
let gesture = null;
let initial_touches;

function watchTouches(e) {
  if (e.touches.length === 2) {
    initial_touches = e.touches;
    gesture = {
      scale: 1,
      rotation: 0,
      translation: { x: 0, y: 0 },
      origin: midpoint(initial_touches)
    };
    if (e.type === 'touchstart' && e.cancelable !== false) {
      e.preventDefault();
    }
    startGesture(gesture);
    container.addEventListener('touchmove', touchMove, { passive: false });
    container.addEventListener('touchend', watchTouches);
    container.addEventListener('touchcancel', watchTouches);
  } else if (gesture) {
    endGesture(gesture);
    gesture = null;
    container.removeEventListener('touchmove', touchMove);
    container.removeEventListener('touchend', watchTouches);
    container.removeEventListener('touchcancel', watchTouches);
  }
}

container.addEventListener('touchstart', watchTouches, { passive: false });

function touchMove(e) {
  if (e.touches.length === 2) {
    let mp_init = midpoint(initial_touches);
    let mp_curr = midpoint(e.touches);
    gesture = {
      scale: distance(e.touches) / distance(initial_touches),
      rotation: angle(e.touches) - angle(initial_touches),
      translation: {
        x: mp_curr.x - mp_init.x,
        y: mp_curr.y - mp_init.y
      },
      origin: mp_init
    };
    doGesture(gesture);
    if (e.cancelable !== false) {
      e.preventDefault();
    }
  }
}
```

Safari mobile: touch or gesture events?

Safari mobile (iOS and iPadOS) is the only browser that has support for both GestureEvent and TouchEvent, so which one should we choose for handling two-finger gestures?

On the one hand, the enhancements Safari applies to GestureEvents make them feel smoother; on the other hand, TouchEvents afford capturing the translation aspect of the gesture. We can't have both… can we?

Surprisingly, we can. Safari's implementation of TouchEvent contains the same scale and rotation properties as the equivalent GestureEvent, if we ever want to use the browser's, rather than our own manually computed, values.

A check for the existence of the two interfaces lets us restrict gesture events to desktop Safari:

```javascript
/*
  Only attach GestureEvent listeners on macOS Safari.
*/
if (window.GestureEvent !== undefined && window.TouchEvent === undefined) {
  container.addEventListener('gesturestart', …);
  container.addEventListener('gesturechange', …);
  container.addEventListener('gestureend', …);
}
```

Applying the transformation

The final piece of the puzzle has us actually applying the transformation to an element. When we talk about transforming elements on a web page, we generally mean either an HTML or an SVG element. The techniques are similar, but the two languages diverge in some aspects.

From gesture to DOMMatrix

We can express the gesture as a transformation matrix using the DOMMatrix interface:

```javascript
function gestureToMatrix(gesture) {
  // Missing components fall back to the identity:
  // no translation, no rotation, a scale of 1.
  return new DOMMatrix()
    .translate(gesture.translation.x || 0, gesture.translation.y || 0)
    .rotate(gesture.rotation || 0)
    .scale(gesture.scale || 1);
}
```

To apply the DOM matrix to an HTML element, we assign it to the element's transform style property. On SVG elements, we can similarly set the element's transform attribute:

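```javascript
function applyMatrix(el, matrix) {
  // A 2D DOMMatrix stringifies to "matrix(a, b, c, d, e, f)", which both
  // the CSS `transform` property and the SVG `transform` attribute accept.
  if (el instanceof HTMLElement) {
    el.style.transform = matrix.toString();
    return;
  }
  if (el instanceof SVGElement) {
    el.setAttribute('transform', matrix.toString());
    return;
  }
  throw new Error('Expected HTML or SVG element');
}
```

Applying the gesture origin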

The origin of the transformation for an element can be configured via the transform-origin CSS property or its equivalent SVG attribute. But we can actually factor it right into our transformation. The CSS Transforms Module Level 1 states:

The transformation matrix is computed from the transform and transform-origin properties as follows:

- Start with the identity matrix.
- Translate by the computed X and Y of transform-origin.
- Multiply by each of the transform functions in transform property from left to right.
- Translate by the negated computed X and Y values of transform-origin.

We can update the gestureToMatrix() function to reference an origin:

```javascript
function gestureToMatrix(gesture, origin) {
  // Translate to the origin, apply the gesture, then translate back,
  // mirroring how CSS combines `transform` and `transform-origin`.
  return new DOMMatrix()
    .translate(origin.x, origin.y)
    .translate(gesture.translation.x || 0, gesture.translation.y || 0)
    .rotate(gesture.rotation || 0)
    .scale(gesture.scale || 1)
    .translate(-origin.x, -origin.y);
}
```

You may be wondering why we pass origin as a second argument to the gestureToMatrix() function instead of just reading it off the gesture object. The reason is that the origin of the transformation is fixed at the midpoint of the initial touchpoints.

The origin doesn't change with each doGesture(). We compute it once, at the beginning of the gesture.
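To check that this ordering really keeps the touched points under the fingertips, this sketch replays gestureToMatrix() without a browser. It stores a 2D affine matrix as a plain [a, b, c, d, e, f] array, the same layout DOMMatrix uses for 2D transforms. The helper names are hypothetical. The example gesture has scale 2, rotation 90°, translation (-5, 10), and origin (5, 0). That is what touchpoints moving from (0, 0) and (10, 0) to (0, 0) and (0, 20) would produce:

```javascript
// 2D affine matrix stored as [a, b, c, d, e, f], like DOMMatrix:
//   x' = a*x + c*y + e
//   y' = b*x + d*y + f
const IDENTITY = [1, 0, 0, 1, 0, 0];

// Post-multiplies m by n, as DOMMatrix's chained translate()/rotate()/scale() do.
function multiply(m, n) {
  const [a1, b1, c1, d1, e1, f1] = m;
  const [a2, b2, c2, d2, e2, f2] = n;
  return [
    a1 * a2 + c1 * b2,
    b1 * a2 + d1 * b2,
    a1 * c2 + c1 * d2,
    b1 * c2 + d1 * d2,
    a1 * e2 + c1 * f2 + e1,
    b1 * e2 + d1 * f2 + f1,
  ];
}

const translateBy = (m, tx, ty) => multiply(m, [1, 0, 0, 1, tx, ty]);
const scaleBy = (m, s) => multiply(m, [s, 0, 0, s, 0, 0]);

function rotateBy(m, degrees) {
  const r = (degrees * Math.PI) / 180;
  const cos = Math.cos(r);
  const sin = Math.sin(r);
  return multiply(m, [cos, sin, -sin, cos, 0, 0]);
}

function applyTo([a, b, c, d, e, f], p) {
  return { x: a * p.x + c * p.y + e, y: b * p.x + d * p.y + f };
}

// Same sequence of steps as gestureToMatrix(gesture, origin).
function gestureToAffine(gesture, origin) {
  let m = IDENTITY;
  m = translateBy(m, origin.x, origin.y);
  m = translateBy(m, gesture.translation.x, gesture.translation.y);
  m = rotateBy(m, gesture.rotation);
  m = scaleBy(m, gesture.scale);
  m = translateBy(m, -origin.x, -origin.y);
  return m;
}

const gesture = { scale: 2, rotation: 90, translation: { x: -5, y: 10 } };
const m = gestureToAffine(gesture, { x: 5, y: 0 });
const first = applyTo(m, { x: 0, y: 0 });   // ≈ { x: 0, y: 0 }
const second = applyTo(m, { x: 10, y: 0 }); // ≈ { x: 0, y: 20 }
```

Both initial touchpoints land on the current ones. Dropping the two origin translations would rotate and scale around (0, 0) instead, and the second point would no longer follow its finger.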

For HTML elements, we need to express the origin in relation to the element itself, so we read the element's bounding client rectangle. On the other hand, transformations to an SVG element relate to the element's nearest <svg> container, which establishes its coordinate system. The container's getScreenCTM() returns the matrix that maps from its coordinate system to screen coordinates, so we use its inverse() to express the transformation origin in terms of <svg> coordinates.

The unified getOrigin() function below works for both HTML and SVG elements:

```javascript
function getOrigin(el, gesture) {
  if (el instanceof HTMLElement) {
    // Origin relative to the element's top-left corner.
    let rect = el.getBoundingClientRect();
    return {
      x: gesture.origin.x - rect.x,
      y: gesture.origin.y - rect.y
    };
  }
  if (el instanceof SVGElement) {
    // Map screen coordinates into the user space of the nearest <svg>.
    let matrix = el.ownerSVGElement.getScreenCTM().inverse();
    let pt = new DOMPoint(gesture.origin.x, gesture.origin.y);
    return pt.matrixTransform(matrix);
  }
  throw new Error('Expected HTML or SVG element');
}
```

For transforms to work correctly on HTML elements, we need to jump through a couple more hoops:

- Set the element's origin to the top-left corner with transform-origin: 0 0. The default is the object's center, at 50% 50%.

- Clear the element's transform at the beginning of each gesture, before reading its getBoundingClientRect(), to obtain the origin in relation to the original, untransformed position of the element.

These concerns are factored into the final solution below.

Putting it all together

Here's the code to apply the transformation matrix to the object (either HTML or SVG) on the startGesture, doGesture and endGesture callbacks.

Notice how we hold onto the object's initial matrix in init_m to multiply into the current matrix at every step of the gesture.

```javascript
/*
  Older versions of Safari expose transformation matrices
  on the `WebKitCSSMatrix` interface instead of `DOMMatrix`
*/
if (!window.DOMMatrix) {
  if (window.WebKitCSSMatrix) {
    window.DOMMatrix = window.WebKitCSSMatrix;
  } else {
    throw new Error("Couldn't find a DOM Matrix implementation");
  }
}

let origin;
let init_m = new DOMMatrix();
let el = document.querySelector('#target');

/*
  HTML elements have their `transform-origin`
  set to '50% 50%' by default; we need to
  reset it to the top-left corner.
*/
if (el instanceof HTMLElement) {
  el.style.transformOrigin = '0 0';
}

function startGesture(gesture) {
  if (el instanceof HTMLElement) {
    /*
      Clear the element's transform so we can
      measure its original position with respect to the screen.
      (We don't need to restore it because it gets
      overwritten by `applyMatrix()` anyway.)
    */
    el.style.transform = '';
  }
  origin = getOrigin(el, gesture);
  applyMatrix(el, gestureToMatrix(gesture, origin).multiply(init_m));
}

function doGesture(gesture) {
  applyMatrix(el, gestureToMatrix(gesture, origin).multiply(init_m));
}

function endGesture(gesture) {
  init_m = gestureToMatrix(gesture, origin).multiply(init_m);
  applyMatrix(el, init_m);
}
```

Test this code out in the demos listed in the Conclusion below.
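Why gestureToMatrix(...).multiply(init_m), and not the other way around? The gesture origin is measured against the element's untransformed box, in the space where init_m has already been applied, so the new gesture has to act after init_m. The DOM-free sketch below checks this with hypothetical helpers (multiply(), scaleAbout(), transformPoint()) standing in for DOMMatrix: the element is already zoomed to 2×, the user pinches again at one of its points, and only one multiplication order keeps that point under the fingers.

```javascript
// Hypothetical 2D stand-ins for DOMMatrix (fields a–f).
function multiply(m, n) {
  // m × n: n is applied to a point first, like m.multiply(n).
  return {
    a: m.a * n.a + m.c * n.b,
    b: m.b * n.a + m.d * n.b,
    c: m.a * n.c + m.c * n.d,
    d: m.b * n.c + m.d * n.d,
    e: m.a * n.e + m.c * n.f + m.e,
    f: m.b * n.e + m.d * n.f + m.f
  };
}

// Uniform scale by s around point o: translate(o) · scale(s) · translate(-o).
const scaleAbout = (s, o) => ({ a: s, b: 0, c: 0, d: s, e: o.x - s * o.x, f: o.y - s * o.y });

function transformPoint(m, p) {
  return { x: m.a * p.x + m.c * p.y + m.e, y: m.b * p.x + m.d * p.y + m.f };
}

// The element has already been zoomed to 2× around its top-left corner.
const init_m = scaleAbout(2, { x: 0, y: 0 });

// Its local point (5, 0) is displayed at (10, 0); the user pinches there for another 2×.
const local = { x: 5, y: 0 };
const gesture_m = scaleAbout(2, transformPoint(init_m, local));

const right = multiply(gesture_m, init_m); // gesture applied after init_m
const wrong = multiply(init_m, gesture_m); // gesture applied before init_m

console.log(transformPoint(right, local)); // { x: 10, y: 0 }: stays under the fingers
console.log(transformPoint(wrong, local)); // { x: 0, y: 0 }: drifts away
```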

Refining the implementation

Since browsers on touchscreen-enabled devices implement several default behaviors for user gestures, any tap on the screen is under scrutiny: will it be part of a double-tap? Does the user want to initiate a scroll, or follow the hyperlink underneath the touchpoint?

Disabling some of these default behaviors disambiguates taps. If we don't need the double-tap-to-zoom gesture, the browser can make taps work like clicks without introducing an artificial delay.

This is the general idea behind the touch-action CSS property. It instructs the browser to apply only some of its default behaviors when the user performs one- or two-finger gestures: one-finger scrolling, pinch-zooming, or double-tap-to-zoom.

Depending on the exact needs of our implementation, we might apply touch-action: pan-x pan-y (which tells the browser to keep one-finger scrolling while turning off its own handling of all other gestures), or even touch-action: none to our container, in which case the responsibility is ours to implement all touch interactions. As a rule of thumb for touch-action, only disable the browser behaviors you plan to replace with custom gestures.
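One way to follow that rule of thumb is to derive the touch-action value from the list of behaviors we replace. The touchActionFor() helper below is a hypothetical sketch, not part of any API; it relies on double-tap-to-zoom being available only under touch-action: auto, so any other value also turns it off.

```javascript
// Hypothetical helper: given the browser behaviors our code replaces,
// return a touch-action value that keeps all the others.
// Double-tap-to-zoom only survives under `auto`, so any other value drops it.
function touchActionFor({ pan = false, pinchZoom = false, doubleTapZoom = false } = {}) {
  if (!pan && !pinchZoom && !doubleTapZoom) {
    return 'auto'; // we replace nothing, so keep every default
  }
  const keep = [];
  if (!pan) keep.push('pan-x', 'pan-y');
  if (!pinchZoom) keep.push('pinch-zoom');
  return keep.length ? keep.join(' ') : 'none';
}

console.log(touchActionFor({ pinchZoom: true, doubleTapZoom: true })); // → pan-x pan-y
console.log(touchActionFor({ pan: true, pinchZoom: true, doubleTapZoom: true })); // → none
```

In the browser, the result could be assigned with something like el.style.touchAction = touchActionFor({ pinchZoom: true, doubleTapZoom: true }).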

Some applications may benefit from a bit more nuance. For example, as gestures tend to be imprecise, we might impose thresholds that the various components of the transformation must reach to be considered intentional. Alternatively, we might tease out, and apply, only the most prominent component of the transformation, while ignoring the rest.
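As an illustration of the threshold idea, a gesture could be filtered before it reaches gestureToMatrix(). The applyThresholds() function and the threshold values below are hypothetical and would need tuning on real devices; the sketch assumes the gesture shape used throughout, with rotation in degrees and scale as a factor.

```javascript
// Hypothetical thresholds below which a component counts as unintentional.
const THRESHOLDS = { rotation: 5, scale: 0.05 }; // degrees; relative scale change

// Returns a copy of `gesture` where rotation and scale components
// too small to be intentional are reset to their neutral values.
function applyThresholds(gesture, thresholds = THRESHOLDS) {
  const rotation = gesture.rotation || 0;
  const scale = gesture.scale || 1;
  return {
    ...gesture,
    rotation: Math.abs(rotation) < thresholds.rotation ? 0 : rotation,
    scale: Math.abs(scale - 1) < thresholds.scale ? 1 : scale
  };
}

console.log(applyThresholds({ rotation: 2, scale: 1.5 })); // { rotation: 0, scale: 1.5 }
```

With this naive version, a component jumps in abruptly once it crosses its threshold; subtracting the threshold from the value instead would make the transition smoother.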

I hope this article has made the basic approach clear enough that these refinements can be neatly layered on top of it. You may find some pointers in the Questions & Answers section.

Conclusion

In this article we've looked at how we can treat DOM GestureEvent, WheelEvent, and TouchEvent uniformly to add support for two-finger gestures to web pages with some pretty good results across a variety of devices.

Some quick links:

- DOM gesture logger

- Reference JavaScript implementation

- Demo: Gestures on an HTML element

- Demo: Gestures on a SVG element

- Demo: Combined gestures and zoom buttons on an HTML element

This supplemental material is also published on GitHub in the danburzo/ok-zoomer repository.

Questions & Answers

How do I find the element's current scale?

To know the element's scale at any given moment, we need to either:

- keep track of all the gestures we've applied and accumulate the scales in a variable, or

- somehow extract the scale from the element's current transformation matrix.
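The first option only needs a running product, since each gesture's scale is relative to the element's scale when the gesture started. A minimal sketch, where trackScale() and accumulated_scale are hypothetical names meant to be used alongside endGesture():

```javascript
// Hypothetical running total of the element's scale.
let accumulated_scale = 1;

// Call with the final gesture object, e.g. from endGesture().
function trackScale(gesture) {
  accumulated_scale *= gesture.scale || 1;
  return accumulated_scale;
}

trackScale({ scale: 2 });
console.log(trackScale({ scale: 0.75 })); // 1.5
```

The catch is that every other code path that changes the element's matrix has to update this variable as well.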

The DOMMatrix interface doesn't have a method to retrieve individual components such as scale, translation, or rotation from a matrix, so we have to implement it ourselves.

To that end we can use the algorithm described in Spencer W. Thomas's article Decomposing a matrix into simple transformations, published in Graphics Gems II (1991). A pseudo-code version is published in the CSS Transforms specification, and a C implementation is available on GitHub.

The algorithm handles cases that don't apply to our situation (non-uniform scaling, skewing, etc.), so if we're only interested in extracting the uniform scale, we can get away with a one-liner:

```javascript
const getScaleFromMatrix = matrix => Math.hypot(matrix.a, matrix.b);
```

And if you are interested in the general method, you can find some JavaScript implementations on GitHub.
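The one-liner works because, for a matrix made only of translation, rotation by θ and uniform scaling by s, the first column holds a = s·cos θ and b = s·sin θ. The same two entries also yield the rotation via Math.atan2(). The sketch below checks both on a plain object with DOMMatrix-style fields; getRotationFromMatrix() is a hypothetical companion that, like the one-liner, assumes no skew or non-uniform scaling.

```javascript
const getScaleFromMatrix = matrix => Math.hypot(matrix.a, matrix.b);

// Hypothetical companion: rotation in degrees, assuming the matrix
// only combines translation, rotation and uniform scaling.
const getRotationFromMatrix = matrix =>
  (Math.atan2(matrix.b, matrix.a) * 180) / Math.PI;

// Rotation by 30° combined with a uniform 3× scale; e and f (translation) don't matter.
const rad = (30 * Math.PI) / 180;
const m = {
  a: 3 * Math.cos(rad),
  b: 3 * Math.sin(rad),
  c: -3 * Math.sin(rad),
  d: 3 * Math.cos(rad),
  e: 40,
  f: -12
};

console.log(getScaleFromMatrix(m)); // ≈ 3
console.log(getRotationFromMatrix(m)); // ≈ 30
```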

Knowing the current scale is useful in a variety of cases:

Zooming to a specific scale. To produce an absolute scale, such as 50% or 400%, we can express it as relative to the current scale and create a gesture for it:

```javascript
let current_scale = getScaleFromMatrix(current_transform_matrix);
let gesture = {
  scale: 0.5 / current_scale, // 50% scale
  // …
};
// apply gesture
```

Limiting the zoom level to a minimum/maximum. Similarly to zooming to an absolute scale, we can clamp our scale to a minimum and maximum. The function below limits the scale in a gesture so that the resulting scale is in the 25–400% interval:

```javascript
// `matrix` is the element's current transformation matrix
// (`init_m` in the code above).
function clampScale(gesture, matrix) {
  let s = getScaleFromMatrix(matrix);
  let proposed_scale = (gesture.scale || 1) * s;
  if (proposed_scale > 4 || proposed_scale < 0.25) {
    return {
      ...gesture,
      scale: Math.max(0.25, Math.min(4, proposed_scale)) / s
    };
  }
  return gesture;
}
```

You can see both these examples in action in Demo: Combined gestures and zoom buttons on an HTML element.

Revisions

Nov 22, 2020: Published a first draft of this article on dev.to.

Nov 15, 2021: Updated the Safari gesture events section to note that Safari 15 now also produces wheel events for pinch-zoom gestures, in line with the other major browsers, as well as to point out the double-gestureend bug.

Jan 10, 2022: Fixed some typos, improved wheel-to-gesture code, and added a Questions & Answers section.

May 19, 2022: Fixed the SVG case in applyMatrix() for Chrome, which doesn't currently support a DOMMatrix argument to SVGTransformList.createSVGTransformFromMatrix(). (Chromium#835431)

Thanks to Arne, Stephane and Sam for helping improve this article.