How I digitize books

One of my current passions is publishing interesting public-domain Romanian books on llll.ro. The website doesn't just collect texts already available on the web: the idea is to work with the primary sources to provide transcriptions that are faithful to the originals.

In its journey from paper to HTML, the material goes through a few steps; some are automated, others still rely on manual work. To ensure quality, the workflow still needs a final pair of eyes, but we want as little grunt work as possible. This article describes the workflow on which I've settled (for now) to bring books online.

In short, the process entails:

- photographing the pages;

- performing Optical Character Recognition (OCR) on them;

- cleaning up and formatting the text.

Image acquisition

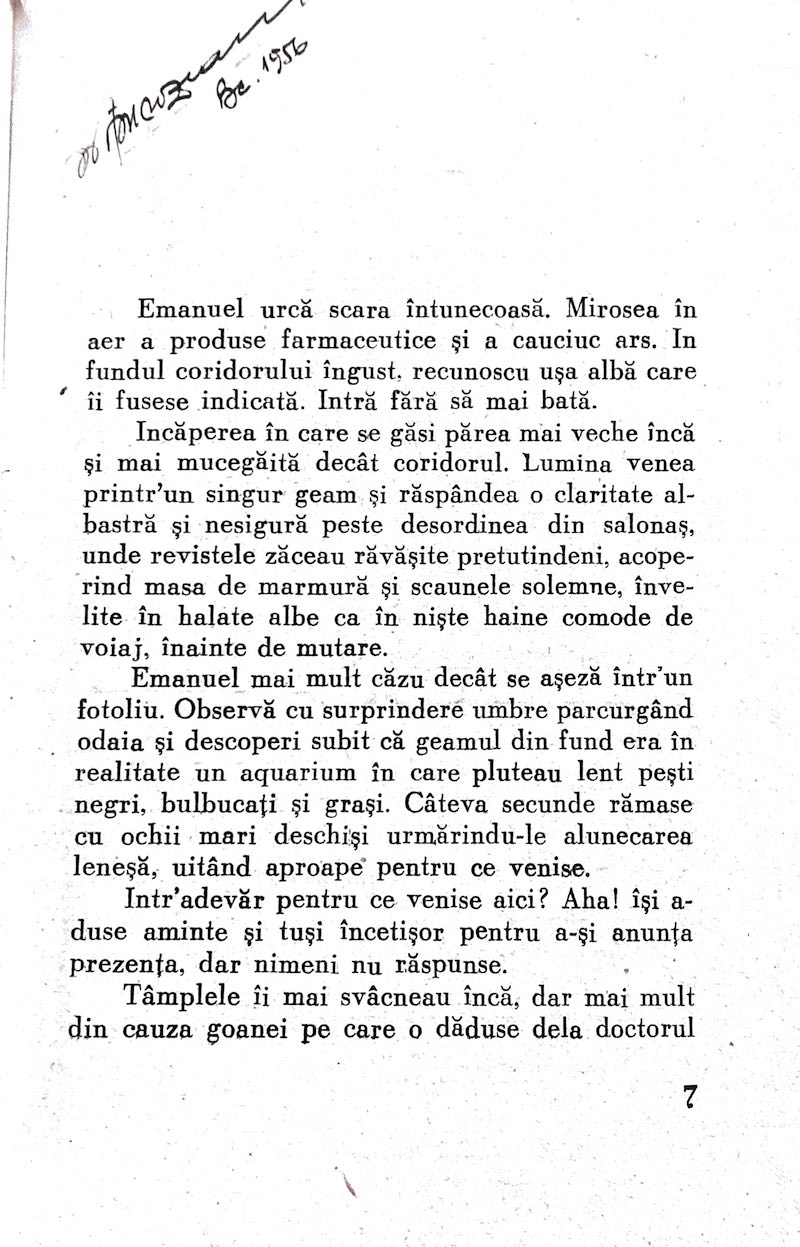

The first step is to capture clear images of each page. While OCR can work on plain camera photographs, a bit of pre-processing can get you more accurate results. Books tend to have hundreds of pages, so every variation in the ergonomics of this step has significant cumulative effects.

I use the (free) Scannable app on iOS to capture, crop, and perspective-correct the pages all in one go. When I'm happy with the crops, I use Send → Share → AirDrop to transfer the set of images to my computer — just make sure the selected format is PNG, not PDF.

Optical character recognition

Next, we put the images to good use. For OCR to be truly useful, it has to be very accurate; otherwise, it's quicker and less frustrating to transcribe everything by hand than to fix a Unicode character soup.

For the kinds of texts I'm digitizing (Romanian, possibly using obsolete orthography and diacritical marks), the offline OCR tools I've tested do a lukewarm job. Online services, for the most part, aren't much better, with one exception: Google's Vision API provides near-flawless results. It's a paid, but cheap, service: at the time of writing, the first 1000 images are free, after which you pay around $1.50 per 1000 images.
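To get a feel for what a book costs, here is a back-of-the-envelope sketch in Python. The function name and its defaults are mine; it assumes the figures quoted above still hold, that the free allowance applies once per billing period, and that billing is proportional to the number of images rather than rounded to whole thousands.

```python
def estimate_ocr_cost(pages, free_pages=1000, price_per_thousand=1.50):
    """Rough OCR cost in USD for `pages` images in one billing period.

    The defaults are the prices quoted in this article at the time of
    writing; check the current pricing page before relying on them.
    """
    billable = max(0, pages - free_pages)
    return billable / 1000 * price_per_thousand
```

Under these assumptions a 300-page book fits in the free allowance, and even a few thousand pages cost only a few dollars.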

I wrote a little web tool called Vizor to interact with the Vision API. After you prepare an API key (remember to enable billing for it to work!) you simply drag-and-drop a set of images onto it and get plain text in return.
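For the curious, a request to the Vision API looks roughly like the Python sketch below. This is not Vizor's code, just a minimal illustration: the helper names `build_request` and `annotate` are hypothetical, and the choice of the `DOCUMENT_TEXT_DETECTION` feature and of a Romanian language hint are my assumptions about what suits book pages.

```python
import base64
import json
import urllib.request

VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"


def build_request(image_bytes, language_hints=("ro",)):
    """Build the JSON body for a single-image annotate request."""
    return {
        "requests": [
            {
                # The image travels inline, base64-encoded.
                "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
                # Assumption: dense-text OCR is the right feature for book pages.
                "features": [{"type": "DOCUMENT_TEXT_DETECTION"}],
                "imageContext": {"languageHints": list(language_hints)},
            }
        ]
    }


def annotate(image_bytes, api_key):
    """POST one image to the API and return its entry from `responses`."""
    body = json.dumps(build_request(image_bytes)).encode("utf-8")
    request = urllib.request.Request(
        f"{VISION_ENDPOINT}?key={api_key}",
        data=body,  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)["responses"][0]
```

Calling `annotate(open("page-001.png", "rb").read(), api_key)` with a valid key (and a file name of your own) should return a dictionary whose `fullTextAnnotation` entry holds the recognized text.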

In the API's JSON response, the text is segmented in a hierarchy of Pages, Blocks, Paragraphs, Words and Symbols. Vizor stitches them together according to your needs: you can either preserve the line breaks (Verse), or obtain nice, flowing paragraphs (Prose).
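The stitching can be sketched in a few lines of Python. Again, this is an illustration rather than Vizor's actual implementation: the `stitch` function and its `mode` parameter are hypothetical, and it relies on the `detectedBreak` property that the API attaches to the last symbol of a word or line.

```python
# Break types that mark the end of a printed line.
LINE_ENDINGS = {"EOL_SURE_SPACE", "LINE_BREAK"}


def stitch(annotation, mode="prose"):
    """Flatten a fullTextAnnotation dict into plain text.

    mode="verse" keeps the line breaks of the printed page;
    mode="prose" joins the lines of each paragraph into flowing text.
    """
    paragraphs = []
    for page in annotation.get("pages", []):
        for block in page.get("blocks", []):
            for paragraph in block.get("paragraphs", []):
                parts = []
                for word in paragraph.get("words", []):
                    for symbol in word.get("symbols", []):
                        parts.append(symbol.get("text", ""))
                        brk = (
                            symbol.get("property", {})
                            .get("detectedBreak", {})
                            .get("type")
                        )
                        if brk in ("SPACE", "SURE_SPACE"):
                            parts.append(" ")
                        elif brk in LINE_ENDINGS:
                            parts.append("\n" if mode == "verse" else " ")
                        elif brk == "HYPHEN":
                            # A word split across two lines: keep the split
                            # in verse mode, rejoin the halves in prose mode.
                            parts.append("-\n" if mode == "verse" else "")
                text = "".join(parts).strip()
                if text:
                    paragraphs.append(text)
    # Paragraphs are separated by a blank line in both modes.
    return "\n\n".join(paragraphs)
```

Passing the `fullTextAnnotation` part of a response to `stitch(annotation, mode="verse")` keeps one printed line per output line, which is what you want for poetry; the default mode suits running text.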

Cleaning up the text

I bring the plain text into Sublime Text and run it through a series of checks I've written down. These are regular expressions (regexes) that I paste into Sublime Text's search field to bring out possible problems: the wrong diacritical marks, characters outside of the expected ranges, and so on. Be sure to enable regular expression search, and toggle case sensitivity as needed.
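To give an idea of what such checks look like, here is a small Python sketch. These are not my exact regexes, only plausible examples of the same kind: the `CHECKS` table and the `run_checks` helper are hypothetical, and the set of "expected" characters is an assumption that would need extending for texts in older orthographies.

```python
import re

CHECKS = {
    # S and T with cedilla (U+015E, U+015F, U+0162, U+0163), where Romanian
    # uses the comma-below letters (U+0218, U+0219, U+021A, U+021B).
    "cedilla instead of comma-below": re.compile(r"[\u015E\u015F\u0162\u0163]"),
    # Anything other than printable ASCII, the Romanian letters with
    # diacritics, and a few typographic marks (quotes, dashes, ellipsis).
    "unexpected character": re.compile(
        r"[^\x20-\x7E"
        r"\u0102\u0103\u00C2\u00E2\u00CE\u00EE\u0218\u0219\u021A\u021B"
        r"\u201E\u201D\u00AB\u00BB\u2013\u2014\u2026]"
    ),
    # Runs of two or more spaces.
    "repeated spaces": re.compile(r" {2,}"),
    # A space stranded before a punctuation mark.
    "space before punctuation": re.compile(r" [,.;:!?]"),
}


def run_checks(text):
    """Return (line number, check label, matched text) for every hit."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for label, pattern in CHECKS.items():
            for match in pattern.finditer(line):
                findings.append((lineno, label, match.group()))
    return findings
```

Each pattern can also be pasted on its own into an editor's regex search. Note that a cedilla letter is reported twice, once by each of the first two checks, because it is also outside the expected character set.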

Then come the manual bits. I mark up the headings, lists, and any bold/italic sections in Markdown syntax. To spell check, I grab the text and paste it into the Pages app, and use its spellchecking tools to identify any typos. The last pass involves eyeballing the entire text to identify any issues I missed with the automated checks. To do this, I produce a PDF or EPUB file from the HTML using percollate:

```
# Produce a PDF file:
percollate pdf /path/to/doc.html

# Produce an EPUB file:
percollate epub /path/to/doc.html
```

I then load the file on my iPad and skim it for anything out of the ordinary. Any error I spot I highlight or otherwise annotate right inside the app, so they're easy to review at the end.

This process allows me to produce high-quality hypertext out of a moderately sized book in a few evenings' work, with proofreading taking the bulk of the time.